Thales’s Friendly Hackers unit invents metamodel to detect AI-generated deepfake images – Africa.com

- As part of a challenge organized by the French Defense Innovation Agency (AID) to detect images created by today's AI platforms, the team at cortAIx, Thales's AI accelerator, developed a meta-model capable of detecting AI-generated deepfakes.

- The Thales meta-model is built on an aggregation of models, each of which assigns an authenticity score to an image to determine whether it is real or fake.

- AI-generated image, video, and audio content is increasingly used for disinformation, manipulation, and identity fraud.
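Beyond mentioning machine learning techniques and decision trees, the release does not say how the individual scores are combined. A reliability-weighted average can illustrate the aggregation idea. This is a minimal sketch that assumes each detector outputs an authenticity score in [0, 1] (1.0 meaning "looks real") and carries a hypothetical reliability weight, such as its accuracy on a validation set. The `DetectorResult` type, the `aggregate` function, the detector labels, and the 0.5 threshold are all illustrative assumptions, not Thales's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DetectorResult:
    name: str            # illustrative detector label, e.g. "clip", "dnf", "dct"
    authenticity: float  # score in [0, 1]; 1.0 means the image looks real
    reliability: float   # hypothetical weight, e.g. validation accuracy


def aggregate(results: list[DetectorResult], threshold: float = 0.5) -> tuple[float, str]:
    """Combine per-detector authenticity scores into a single verdict.

    A reliability-weighted average stands in for a learned meta-model here;
    the weights and the threshold are assumptions for illustration only.
    """
    if not results:
        raise ValueError("need at least one detector result")
    total_weight = sum(r.reliability for r in results)
    if total_weight <= 0:
        raise ValueError("reliability weights must sum to a positive value")
    # Weighted mean: more reliable detectors pull the combined score harder.
    score = sum(r.authenticity * r.reliability for r in results) / total_weight
    verdict = "real" if score >= threshold else "fake"
    return score, verdict


if __name__ == "__main__":
    results = [
        DetectorResult("clip", authenticity=0.30, reliability=0.9),
        DetectorResult("dnf", authenticity=0.20, reliability=0.8),
        DetectorResult("dct", authenticity=0.70, reliability=0.5),
    ]
    score, verdict = aggregate(results)
    print(f"combined score {score:.3f} -> {verdict}")
```

In a trained meta-model, something like a decision tree fitted on the per-detector scores would replace the fixed weights. That would let the combination learn where each detector is strong or weak instead of relying on hand-set reliabilities.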

Meudon, France, November 22, 2024 -/African Media Agency (AMA)/- Artificial intelligence is the central theme of this year's European Cyber Week, taking place in Rennes, Brittany, from 19 to 21 November. In a challenge organized by the French Defense Innovation Agency (AID), the Thales team successfully developed a meta-model for detecting AI-generated images. As AI technology becomes more widely used, and as disinformation grows more prevalent in the media and affects many sectors of the economy, deepfake detection meta-models offer a way to combat image manipulation across a wide range of use cases, for example in the fight against identity fraud.

AI-generated images are created using AI platforms such as Midjourney, DALL-E, and Firefly. Some studies predict that the use of deepfakes for identity theft and fraud could cause huge economic losses within a few years. According to Gartner, around 20% of cyberattacks in 2023 likely included deepfake content as part of disinformation and manipulation campaigns. The report highlights the growing use of deepfakes in financial fraud and advanced phishing attacks.

“Thales's meta-model for deepfake detection tackles identity fraud and morphing techniques,” explains Christopher Meyer, senior artificial intelligence expert and CTO of cortAIx, Thales's AI accelerator. “Aggregating multiple methods, including neural networks, noise detection, and spatial frequency analysis, can help us better secure the growing number of solutions that require biometric identity checks. This is a significant technological advance and a testament to the expertise of Thales AI researchers.”

The Thales meta-model analyzes the authenticity of images using machine learning techniques and decision trees, weighing the strengths and weaknesses of each model. It combines several models, including:

- The CLIP (Contrastive Language-Image Pre-training) method connects images and text by learning a shared representation. To detect deepfakes, CLIP methods analyze images and compare them with textual descriptions to identify inconsistencies and visual artifacts.

- The DNF (Diffusion Noise Feature) method uses current image generation architectures, called diffusion models, to detect deepfakes. Diffusion models rely on an estimate of the amount of noise to add to an image to produce a “hallucination” that creates content out of nothing, and this estimate can in turn be used to detect whether an image was generated by AI.

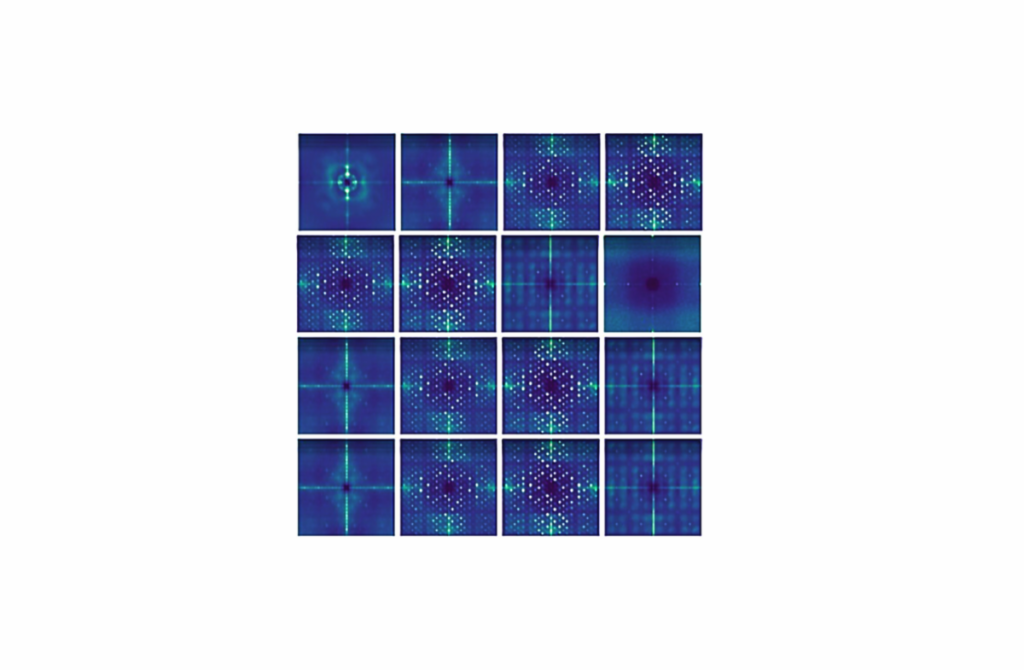

- The DCT (Discrete Cosine Transform) method of deepfake detection analyzes the spatial frequencies of images to discover hidden artifacts. By converting images from the spatial domain (pixels) to the frequency domain, DCT can detect subtle anomalies in the image structure that occur when deepfakes are generated and are often invisible to the naked eye.
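The release does not describe how Thales turns DCT coefficients into a detection signal. A minimal sketch of the general idea follows. It applies an orthonormal 2-D DCT-II to a single 8×8 grayscale block (the same block size JPEG uses), then measures how much of the non-DC energy sits in high-frequency coefficients. The functions `dct2` and `high_freq_ratio`, and the `cutoff` parameter, are hypothetical names chosen for illustration. A real detector would compute features like this over many blocks and feed them to a trained classifier.

```python
import math


def dct_matrix(n: int) -> list[list[float]]:
    """Orthonormal DCT-II basis: C[k][i] = a(k) * cos(pi * (2i + 1) * k / (2n))."""
    rows = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n)])
    return rows


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain matrix product of an m x k and a k x p matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: list[list[float]]) -> list[list[float]]:
    return [list(row) for row in zip(*m)]


def dct2(block: list[list[float]]) -> list[list[float]]:
    """2-D DCT-II of a square pixel block: C @ X @ C^T (spatial -> frequency domain)."""
    c = dct_matrix(len(block))
    return matmul(matmul(c, block), transpose(c))


def high_freq_ratio(block: list[list[float]], cutoff: int) -> float:
    """Share of non-DC spectral energy in coefficients (u, v) with u + v >= cutoff.

    The cutoff is an illustrative assumption, not a published parameter.
    """
    coeffs = dct2(block)
    n = len(block)
    total = high = 0.0
    for u in range(n):
        for v in range(n):
            if u == 0 and v == 0:
                continue  # skip the DC term (average brightness of the block)
            energy = coeffs[u][v] ** 2
            total += energy
            if u + v >= cutoff:
                high += energy
    return high / total if total > 0 else 0.0


if __name__ == "__main__":
    # Smooth brightness ramp: energy stays in low frequencies.
    gradient = [[16.0 * (i + j) for j in range(8)] for i in range(8)]
    # Pixel-level checkerboard: a crude stand-in for periodic generator artifacts.
    checker = [[255.0 if (i + j) % 2 == 0 else 0.0 for j in range(8)] for i in range(8)]
    print("gradient:", round(high_freq_ratio(gradient, cutoff=8), 3))
    print("checkerboard:", round(high_freq_ratio(checker, cutoff=8), 3))
```

The checkerboard pattern is used because some image generators are known to leave periodic, grid-like traces from their upsampling layers, and these show up as energy concentrated in the highest DCT frequencies. The smooth ramp keeps its energy in the lowest frequencies.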

The Thales team behind the invention is part of cortAIx, the group's artificial intelligence accelerator, which has more than 600 AI researchers and engineers, 150 of whom are based at the Saclay research and technology cluster south of Paris and work on mission-critical systems. The Friendly Hackers team has developed a toolbox called BattleBox to help assess the robustness of AI systems against attacks designed to exploit the inherent vulnerabilities of different AI models, including large language models. Such attacks include adversarial attacks and attempts to extract sensitive information. To counter them, the team has developed advanced countermeasures such as machine unlearning, federated learning, model watermarking, and model hardening.

In 2023, Thales demonstrated its expertise during the CAID challenge (Conference on Artificial Intelligence for Defense), organized by the French Defense Procurement Agency (DGA), which involved finding AI training data even after it had been deleted from systems to protect confidentiality.

Distributed by African Media Agency (AMA) on behalf of Thales.

About Thales

Thales (Euronext Paris: HO) is a global leader in advanced technologies focused on three business areas: Defense & Security, Aerospace and Cybersecurity & Digital Identity.

The group develops products and solutions that help make the world a safer, greener and more inclusive place.

Thales invests nearly 4 billion euros in R&D every year, particularly in key innovation areas such as AI, cybersecurity, quantum technologies, cloud technologies, and 6G.

Thales has 81,000 employees in 68 countries. In 2023, the group’s sales reached 18.4 billion euros.

Media Contact

Thales, media relations

pressroom@thalesgroup.com

The post Thales' friendly hacking division invents meta-model to detect AI-generated deepfake images first appeared on African Media Agency.